Beyond the Chatbot: Exploring the Future of “Physical AI” at AWS re:Invent 2025

Note: This article is based on a report from Tech Evangelist Kohei “Max” Matsushita published here.

For years, Artificial Intelligence has been confined to the digital world – living in our browser tabs as chatbots or behind mobile apps as predictive algorithms. But as we saw at AWS re:Invent 2025, the “on-screen” era of AI is coming to an end.

Soracom Tech Evangelist Kohei “Max” Matsushita recently took the stage at the Las Vegas show to demonstrate a paradigm shift: Physical AI. By combining Edge AI processing with robust IoT connectivity, we are moving into an era where AI doesn’t just suggest a response; it moves objects in the real world.

What is Physical AI?

Physical AI is the practical application of AI where a system observes the real world through sensors, assesses the situation via a “brain,” and executes an action using physical equipment like robotic arms or industrial actuators.

Think of it as the completion of the OODA Loop (Observe, Orient, Decide, Act). In traditional IoT, we focused on the “Observe” phase (sensors). With Physical AI, we are handing the “Decide” and “Act” phases over to intelligent, situation-adaptive models.
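To make the loop concrete, here is a minimal Python sketch of one Observe, Orient, Decide, Act pass per sensor reading. Everything in it is an assumption for illustration: the `Observation` type, the 5 cm reach threshold, and the action names are hypothetical and were not part of the demo. In a Physical AI system, the hand-written `decide` function is roughly where a learned model would sit.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    distance_cm: float  # hypothetical sensor reading: distance to the target object


def orient(obs: Observation) -> str:
    """Orient: turn a raw reading into a coarse description of the situation."""
    return "in_reach" if obs.distance_cm <= 5.0 else "out_of_reach"


def decide(situation: str) -> str:
    """Decide: choose an action for the situation (a learned model could sit here)."""
    return "grip" if situation == "in_reach" else "move_closer"


def run_ooda(readings: List[float], act: Callable[[str], None]) -> None:
    """Run one Observe-Orient-Decide-Act pass per sensor reading."""
    for value in readings:
        obs = Observation(distance_cm=value)  # Observe
        situation = orient(obs)               # Orient
        action = decide(situation)            # Decide
        act(action)                           # Act


actions: List[str] = []
run_ooda([12.0, 7.5, 4.0], actions.append)
print(actions)  # ['move_closer', 'move_closer', 'grip']
```

Here `act` simply records the chosen action; on real hardware it would be the call that drives an actuator.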

The 100ms Barrier: Why Latency is the Ultimate KPI

In the digital world, a two-second delay on a webpage is an annoyance. In Physical AI, robotic arms often need response times of under 500ms.

Human perception begins to register a “lack of response” at roughly 100ms, which makes that figure the “Gold Standard” for designing real-world AI systems. To hit this mark, developers often assume they must rely solely on Edge AI. However, Max’s demonstration at re:Invent challenged this assumption.

The Latency Reality Check:

- Cloud Latency: Even with a round trip from Las Vegas to an East Coast server, app-level latency can be kept within 150ms if the region is optimized.

- The Verdict: While Edge computing is vital for autonomy, Physical AI can be achieved through a hybrid cloud approach if your IoT communication environment is stable enough.
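One way to apply this reality check to your own setup is to measure round trips and compare them against a budget. The sketch below is an assumption-laden illustration, not the measurement method used at re:Invent: `measure_ms`, `within_budget`, and `fake_cloud_inference` are hypothetical names, and the 100ms default simply mirrors the perception threshold mentioned above.

```python
import time
from statistics import median
from typing import Callable, List

BUDGET_MS = 100.0  # assumption: the ~100 ms perception threshold cited above


def measure_ms(call: Callable[[], None], samples: int = 5) -> List[float]:
    """Time `call` several times and return each duration in milliseconds."""
    durations = []
    for _ in range(samples):
        start = time.perf_counter()
        call()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


def within_budget(durations_ms: List[float], budget_ms: float = BUDGET_MS) -> bool:
    """Compare the median round trip against the latency budget."""
    return median(durations_ms) <= budget_ms


def fake_cloud_inference() -> None:
    """Hypothetical stand-in for a real cloud request; it only sleeps briefly."""
    time.sleep(0.02)


if __name__ == "__main__":
    samples = measure_ms(fake_cloud_inference)
    print("within budget:", within_budget(samples))
```

The median is used here for simplicity. For a robot that must never stall, a worst-case or high-percentile figure is probably the more honest number to compare against the budget.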

Beyond Rule-Based Control: Enter the VLA Model

The standard for robotics has long been “rule-based control” (i.e., if-this-then-that programming). If an object is 1cm out of place, a rule-based robot fails.

Physical AI solves this using VLA (Vision-Language-Action) models. Instead of hard-coded coordinates, VLA models generate “Actions” directly from “Vision” (camera data) and “Language” (prompts like “pick up the black object”).
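The difference can be shown with a toy example. The sketch below is not a VLA model and does not use the LeRobot API; it only contrasts a controller that ignores the camera with one whose target follows the observation. The coordinates, the 0.5 cm gripper tolerance, and all function names are hypothetical.

```python
from typing import Tuple

Point = Tuple[float, float]

TAUGHT_POSITION: Point = (10.0, 5.0)  # hard-coded pick position in cm (hypothetical)
GRIPPER_TOLERANCE_CM = 0.5            # hypothetical: how far off the gripper may be


def rule_based_target(_observed: Point) -> Point:
    """Rule-based control: always go to the taught coordinates, ignoring the camera."""
    return TAUGHT_POSITION


def observation_driven_target(observed: Point) -> Point:
    """Stand-in for a learned policy: the target follows what the camera reports."""
    return observed


def grasp_succeeds(target: Point, actual: Point) -> bool:
    """A grasp works if the gripper ends up within tolerance of the object."""
    dx, dy = target[0] - actual[0], target[1] - actual[1]
    return (dx * dx + dy * dy) ** 0.5 <= GRIPPER_TOLERANCE_CM


shifted_object: Point = (11.0, 5.0)  # the object sits 1 cm away from the taught position
print(grasp_succeeds(rule_based_target(shifted_object), shifted_object))          # False
print(grasp_succeeds(observation_driven_target(shifted_object), shifted_object))  # True
```

A real policy does far more than copy the observed position, but the shape is the same: the action is a function of what the robot currently sees rather than of a stored coordinate.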

At the re:Invent demo, Max showcased a robotic arm (the SO-101) powered by a Raspberry Pi 5 and running the LeRobot framework. Thanks to imitation learning, the robot wasn’t just following a path; it was observing its environment and adapting its grip in real time.

The Secret Ingredient: Updateability Over Superiority

A common mistake in AI deployment is chasing the “best” model. In the real world, the “best” model is the one that can be updated the fastest.

The physical world is unpredictable, and a static AI model is one that is already becoming obsolete. To maintain a competitive edge, your Physical AI architecture must treat the model as a “living” component.

AWS IoT Greengrass: Bringing the Cloud to the Edge

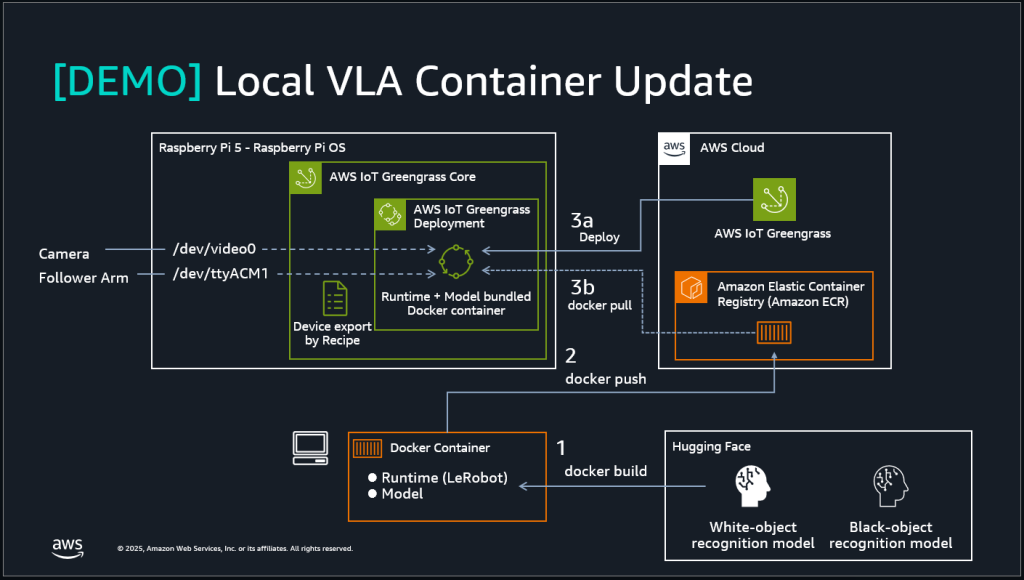

To solve the updateability problem, we use AWS IoT Greengrass, which lets us manage and distribute AI models as containerized “components.”

- Containerize: We build the VLA model (e.g., from Hugging Face) into a Docker container.

- Push: Upload the container image to Amazon Elastic Container Registry (Amazon ECR).

- Deploy: AWS IoT Greengrass pushes that container to a Raspberry Pi in the field, refreshing the robot’s “brain” without anyone ever touching the hardware.
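The steps above come together in a Greengrass component recipe that points at the image in ECR. The following Python sketch builds such a recipe as a plain dictionary and prints it as JSON. It is a minimal illustration under stated assumptions, not the recipe used in the demo: the component name, account ID, region, repository, and tag are placeholders, and the dependency version ranges should be checked against the current AWS IoT Greengrass documentation.

```python
import json


def build_recipe(component_name: str, version: str, image_uri: str) -> dict:
    """Build a Greengrass v2 recipe that pulls and runs one container image."""
    return {
        "RecipeFormatVersion": "2020-01-25",
        "ComponentName": component_name,
        "ComponentVersion": version,
        "ComponentDescription": "Runs a containerized model on the device.",
        "ComponentPublisher": "example",  # placeholder publisher name
        # AWS-provided components that let Greengrass pull images from private ECR.
        "ComponentDependencies": {
            "aws.greengrass.DockerApplicationManager": {"VersionRequirement": "~2.0.0"},
            "aws.greengrass.TokenExchangeService": {"VersionRequirement": "~2.0.0"},
        },
        "Manifests": [
            {
                "Platform": {"os": "linux"},
                # Start the container once the image artifact has been pulled.
                "Lifecycle": {"Run": f"docker run --rm {image_uri}"},
                # The "docker:" prefix marks the artifact as a container image.
                "Artifacts": [{"URI": f"docker:{image_uri}"}],
            }
        ],
    }


# Placeholder image URI: account ID, region, repository, and tag are hypothetical.
IMAGE_URI = "123456789012.dkr.ecr.us-east-1.amazonaws.com/vla-policy:1.0.0"
recipe = build_recipe("com.example.VlaPolicy", "1.0.0", IMAGE_URI)
print(json.dumps(recipe, indent=2))
```

Refreshing the model then amounts to pushing a new image tag, registering a new component version with the updated URI, and deploying that version to the device.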

Connectivity: The Lifeline of Real-World AI

If communication is cut off, your AI is trapped. In Physical AI, LTE and 5G connectivity are not just infrastructure; they are the “lifeline” that keeps the AI fresh.

For the re:Invent demo, we ensured a reliable connection using the Soracom Onyx LTE USB Dongle and the Soracom IoT SIM. This setup provides a stable path for model updates, ensuring that even in unstable field environments, the “path for switching AI” remains open.
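On the device side, software can also help keep that path open when the link drops briefly. The sketch below shows one common pattern, retrying with exponential backoff. It is our illustration rather than something shown in the demo, and `backoff_delays`, `fetch_with_retry`, and the `fetch` callable are hypothetical names.

```python
import time
from typing import Callable, List, Optional


def backoff_delays(count: int, base_s: float = 1.0, cap_s: float = 60.0) -> List[float]:
    """Return `count` waits that double each time, capped at `cap_s` seconds."""
    return [min(cap_s, base_s * (2 ** i)) for i in range(count)]


def fetch_with_retry(
    fetch: Callable[[], bytes],
    attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[bytes]:
    """Call `fetch` up to `attempts` times, waiting longer after each failure."""
    delays = backoff_delays(attempts - 1)
    for attempt in range(attempts):
        try:
            return fetch()
        except ConnectionError:
            if attempt < attempts - 1:
                sleep(delays[attempt])  # wait before the next try
    return None  # every attempt failed; the caller keeps the current model


print(backoff_delays(4))  # [1.0, 2.0, 4.0, 8.0]
```

Returning `None` instead of raising lets the robot keep running its current model and try again on the next update cycle.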

Summary: Freeing AI from the Screen

Physical AI represents a new form of computing, one that has limbs, senses, and the ability to solve problems in our physical space. By combining the responsiveness of Edge AI, the updateability of AWS IoT Greengrass, and the reliable “lifeline” of Soracom connectivity, we are building a Real World AI Platform that drives change far beyond the screen.

Ready to bring your AI into the physical world?

Explore how Soracom’s IoT SIMs and Onyx LTE Dongles provide the stable foundation your Physical AI needs to stay up to date and autonomous. Contact us today to learn how we can help.